
The first step was to amalgamate all of the not-closed protocols into a single list, since the methods for eliminating a protocol would be the same across the various systems (though not so much as I had hoped, as will be explained below):

    1    open           icmp
    2    open|filtered  igmp
    4    open|filtered  ipv4
    6    open           tcp
    17   open           udp
    41   open|filtered  ipv6
    47   open|filtered  gre
    69   open|filtered  sat-mon
    90   open|filtered  sprite-rpc
    102  open|filtered  pnni
    103  open|filtered  pim
    136  open|filtered  udplite
    255  open|filtered  unknown

The necessary protocols which I was familiar with are ICMP (ping, &c.), TCP, and UDP. The rest would require investigation.

The first step was to enable both CONFIG_IP_NF_IPTABLES and CONFIG_IP_NF_TARGET_REJECT in the kernel (then recompile, reinstall, &c.). The next step was to disable the protocol with iptables -A INPUT -p udplite -j REJECT, but a subsequent rescan now showed the protocol as open! Reading the iptables-extensions(8) manpage revealed that the default error message sent by the IPv4 REJECT target is icmp-port-unreachable; this would explain why the protocol itself was reported as open: a port-unreachable reply concerns a specific port within the protocol, which implies that the protocol itself is in use. Further reading showed that the issue could be remedied by adding the --reject-with icmp-proto-unreachable option to the command, giving iptables -A INPUT -p udplite -j REJECT --reject-with icmp-proto-unreachable. Running this gave the desired result of closed.

Since this was not a hard-disable at the kernel level, a few additional steps had to be taken to persist the change. On Gentoo, the following series of commands did the trick: rc-service iptables save, rc-service iptables start, and rc-update add iptables default; simple enough! For my LibreCMC router, I added the aforementioned iptables command to the file /etc/firewall.user. A reboot and rescan on the respective machines showed that these steps gave the desired result.
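The commands above can be collected into a small script. This is only a sketch: the function names and the APPLY variable are hypothetical helpers introduced for illustration, and by default the script merely prints each command so that it can be inspected before anything touches the firewall.

```shell
#!/bin/sh
# Sketch only: prints the commands by default; set APPLY=1 (as root) to run them.
# The helper names and the APPLY variable are illustrative, not from any tool.

# run: echo the command, and execute it only when APPLY=1.
run() {
    echo "$*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

# reject_protocols: append one REJECT rule per protocol name or number,
# replying with ICMP protocol-unreachable instead of the default
# port-unreachable.
reject_protocols() {
    for proto in "$@"; do
        run iptables -A INPUT -p "$proto" -j REJECT --reject-with icmp-proto-unreachable
    done
}

# persist_gentoo: the OpenRC steps described in the text, in the same order.
persist_gentoo() {
    run rc-service iptables save
    run rc-service iptables start
    run rc-update add iptables default
}

# Example (dry run):
#   reject_protocols udplite
#   persist_gentoo
```

Running the example lines as-is only prints the four commands; running them with APPLY=1 as root would execute them. On the LibreCMC router there is nothing to persist this way, since the rule lives in /etc/firewall.user.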

There remains the fundamental question of whether or not the additional complexity involved in disabling the protocol outweighs the benefits from disabling the protocol; perhaps it would have been better to come up with a method for patching the kernel and thus removing the additional netfilter complexity from the non-router systems. Perhaps, but netfilter is what I used this time around.

My first inclination was to try opening the router in order to see if I could flash it. While the top of the router looked easily removable, there was some kind of unexpected resistance near the back of the router which I hadn't been able to figure out on previous attempts. Turns out the trick was to remove the... friction doohickeys on the bottom of the router, exposing screws which kept the top in place:

|

With the board exposed, I tried searching for an obvious serial connection, though nothing definite stuck out at me. Some searching turned up this page and, more specifically, this board configuration, although none of them quite matched my board:

At this point a multitude of problems struck me. First, the board required a certain type of serial connector. I thought I ordered one when I got the board, but I couldn't find it, so perhaps I was mis-remembering. Next, I had no knowledge of how to determine the exact pinout besides extrapolating from the other images of pinouts, a risky bet, and I quite probably did not even have the required equipment. Last, I'd have to solder the pins in order to connect them, which would add extra difficulty to the task. Since there was still some kind of life in the board, I decided to see if there was some other way to fix it.

There were two known states which I was able to put the board in. The first, as mentioned before, was to simply power on the board, which would cause the "PWR" light to turn on and the "SYS" light to flash, then begin flashing "rapidly" ad infinitum. The second state was obtained by holding down the "WPS/Reset" button during power on; this would cause the "PWR" light to turn on and then all lights to flash about once a second for around 15 seconds before leaving just the "PWR" light on. Yet I had no idea what either of these statuses meant, nor how to take advantage of them. A number of pages mentioned the router could pull images from TFTP while in some kind of failsafe mode. In order to see if this method was viable, I followed the advice of trying to listen for a TFTP request by setting up a static IP address on another machine and then monitoring network traffic with tcpdump -Ani enp5s4; what I found was that the second state (holding down the "WPS/Reset" button during power on) would generate the following traffic when the lights would stop blinking:

    --------
    22:52:54.119282 ARP, Request who-has 192.168.1.2 tell 192.168.1.1, length 46
    ........df...*................................
    22:52:54.119327 ARP, Reply 192.168.1.2 is-at 00:14:d1:24:88:f0, length 28
    ...........$......df...*....
    22:52:54.119368 IP 192.168.1.1.6666 > 192.168.1.2.6666: UDP, length 29
    E..9..@...._......... . .%..U-Boot 1.1.4 (Jul 28 2014)
    22:52:54.119399 IP 192.168.1.2 > 192.168.1.1: ICMP 192.168.1.2 udp port 6666 unreachable, length 65
    E..UJ...@...................E..9..@...._......... . .%..U-Boot 1.1.4 (Jul 28 2014)
    22:52:54.119453 IP 192.168.1.1.6666 > 192.168.1.2.6666: UDP, length 7
    E..#..@....t......... . ....uboot> ...........
    22:52:54.119465 IP 192.168.1.2 > 192.168.1.1: ICMP 192.168.1.2 udp port 6666 unreachable, length 43
    E..?J...@..$..........O.....E..#..@....t......... . ....uboot>
    22:52:59.149647 ARP, Request who-has 192.168.1.1 tell 192.168.1.2, length 28
    ...........$................
    22:52:59.149748 ARP, Reply 192.168.1.1 is-at 64:66:b3:9d:17:2a, length 46
    ........df...*.......$........................
    --------
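For anyone wanting to reproduce a capture like this, the listening setup can be sketched as follows. The interface name and the 192.168.1.2 address come from the capture above; the /24 prefix length is an assumption, as are the helper names and the APPLY variable, which exist only so that the script prints its commands instead of running them by default.

```shell
#!/bin/sh
# Sketch only: prints the commands by default; set APPLY=1 (as root) to run them.
# The helper names and the APPLY variable are illustrative.

# run: echo the command, and execute it only when APPLY=1.
run() {
    echo "$*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

# listen_for_bootloader IFACE ADDR: give IFACE a static address, bring it up,
# and dump every packet seen on it in ASCII without resolving names.
listen_for_bootloader() {
    iface="$1"
    addr="$2"
    run ip addr add "$addr" dev "$iface"
    run ip link set "$iface" up
    run tcpdump -Ani "$iface"
}

# Example (dry run); the /24 prefix is an assumption:
#   listen_for_bootloader enp5s4 192.168.1.2/24
```

With the listener running, power-cycling the router while holding the button should make any traffic from the bootloader show up in the terminal.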

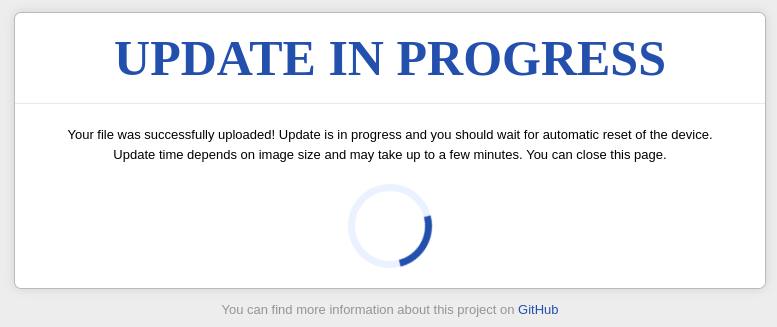

This looks to be some kind of U-Boot CLI prompt, but to port 6666? Over UDP? Huh?! Other pages mentioned emergency recovery over telnet while others mentioned recovery over HTTP, but nothing about what I was seeing. I never did figure out the mysterious packets, but the last section from the previous link as well as some inspiration from previously reading about OpenWRT's failsafe mode ended up saving the day. The trick was to hold the "WPS/Reset" button, power on the router, and then rapidly mash the "WPS/Reset" button; all of the non-power lights would then blink very rapidly about 5 times and then just the "PWR" light would stay lit (this state would not be triggered by simply holding the "WPS/Reset" button). After guessing this trick, to my great joy I was able to navigate to a webpage at 192.168.1.1 and upload a known-good sysupdate image:

|

After the upload completed, the router rebooted and began working as expected. A few weeks later when I had recovered from the ordeal and felt brave again, I re-enabled IPv6 while leaving multicast disabled and compiled a new image, which, when flashed, worked as expected. My plan for killing two birds with one stone had failed, but at least I was able to kill one bird with one stone by chasing it halfway around the world. Or something. The analogy kind of breaks down there.

Arguably, none of this is related to a blog ostensibly about disabling IP protocols, but it was the harsh reality of what it actually took to disable the protocol.

/usr/lib/gcc/armv7a-unknown-linux-gnueabihf/11.2.0/../../../../armv7a-unknown-linux-gnueabihf/bin/ld: scripts/dtc/dtc-parser.tab.o:(.bss+0x8): multiple definition of `yylloc'; scripts/dtc/dtc-lexer.lex.o:(.bss+0x1c): first defined here

This wasn't the first time a previously-working kernel began failing to compile, so without much effort I found commit d047cd8a2760f58d17b8ade21d2f15b818575abc which seemed to address the issue. Yet when I modified the Novena sources ebuild in order to apply the patch, I wound up with the following error:

    Applying 1004-scripts-dtc-Remove-redundant-YYLOC-global-declaratio.patch (-p1) ... [ ok ]
    Failed to dry-run patch 1005-scripts-dtc-Update-to-upstream-version-v1.6.0-2-g87a.patch
    Please attach /var/tmp/portage/sys-kernel/novena-sources-4.7.2-r5/temp/1005-scripts-dtc-Update-to-upstream-version-v1.6.0-2-g87a.err to any bug you may post.
    ERROR: sys-kernel/novena-sources-4.7.2-r5::novena failed (unpack phase):
    Unable to dry-run patch on any patch depth lower than 5.
    Call stack:
    ebuild.sh, line 127: Called src_unpack
    environment, line 1812: Called kernel-2_src_unpack
    environment, line 1408: Called unipatch ' /var/tmp/portage/sys-kernel/novena-sources-4.7.2-r5/distdir/genpatches-4.7-5.base.tar.xz /var/tmp/portage/sys-kernel/novena-sources-4.7.2-r5/distdir/genpatches-4.7-5.extras.tar.xz /var/tmp/portage/sys-kernel/novena-sources-4.7.2-r5/distdir/novena-kernel-patches-4.7.2-r3.tar.gz'
    environment, line 2729: Called die
    The specific snippet of code:
    die "Unable to dry-run patch on any patch depth lower than 5.";

...patch depth? Dry-run? Huh?! I took a look at the kernel eclass and found the following logic:

    if [[ ${PATCH_DEPTH} -eq 5 ]]; then
        eerror "Failed to dry-run patch ${i/*\//}"
        eerror "Please attach ${STDERR_T} to any bug you may post."
        eshopts_pop
        die "Unable to dry-run patch on any patch depth lower than 5."
    fi

I wasn't really sure what to make of this. What is patch depth? Why should I care about a dry run? Just apply the damn patch! Given my previous trial with my router, I decided that I'd hit my tolerance for mental pain on what was not supposed to be a major undertaking and declared my Novena deprecated, especially as its form factor just isn't useful for a laptop. I've little doubt that the CONFIG_NET_IPGRE option choice was correct; as for the unknown protocol, who knows! I didn't even try to investigate the protocol as it seemed possible I'd only find a hypothesis I'd be unable to test without a bunch of additional work. Perhaps one day I'll feel ambitious enough to fix this and will add a subsection on the solution.
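For what it's worth, the "patch depth" in that message most plausibly refers to the -p option of patch(1), which strips that many leading path components from the file names inside a patch, and a "dry run" is patch checking whether everything would apply without modifying any files. The eclass snippet suggests that increasing depths are tried and that reaching 5 means none worked. The following is a minimal sketch of that idea, not the eclass's actual code; the function name is hypothetical.

```shell
#!/bin/sh
# Sketch of a patch-depth search; illustrative only, not the eclass's code.

# find_patch_depth PATCHFILE [DIR]: print the first -p value in 0..4 for which
# a dry run of PATCHFILE succeeds inside DIR, or return 1 if none does.
find_patch_depth() {
    patchfile="$1"
    dir="${2:-.}"
    depth=0
    while [ "$depth" -lt 5 ]; do
        # --dry-run only checks whether the patch would apply;
        # -f keeps patch from asking interactive questions;
        # -d makes patch work inside DIR.
        if patch -d "$dir" -p"$depth" --dry-run -f < "$patchfile" >/dev/null 2>&1; then
            echo "$depth"
            return 0
        fi
        depth=$((depth + 1))
    done
    return 1
}

# Example: find_patch_depth /path/to/some.patch /usr/src/linux
```

Under that reading, the error would mean the patch did not match the 4.7.2 tree at any depth from 0 to 4, which usually points to the patch having been written against a different version of the affected files rather than to a wrong path prefix.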